Detecting a File Handle Leak in the Kotlin Daemon

File handle leaks are notoriously difficult to debug, so much so that most of the “fixes” for them are “increase the file descriptor limit”. However, this is not a fix. This is as close as you can get to covering your ears and closing your eyes, then screaming “I CAN’T HEAR YOU” to a bug. The file handle leak will still exist, but now it’s ignored.

In this case study, I set out to find why the leak was occurring and the best way to fix it.

Signs of a File Handle Leak

The hardest part of debugging an issue is noticing it in the first place, and file handle leaks are very hard to notice until an error is thrown. Even then, the leak may go unnoticed, since the error could be handled by logic that changes the exception cause or swallows it altogether. So how did I notice the one in the Kotlin daemon? Dumb luck. I had been tracking another file handle leak in the Gradle daemon, and got curious about how many files remained open in the Kotlin daemon after compilation.

There are some signs that your application may have a file handle leak, but even these signs are easily missed. A few common signs are:

- Errors about files that cannot be created

- Errors when deleting a file (especially on Windows)

- Files that cannot be opened, for any reason

- Files that have garbage data written to them or become corrupted

These issues don't appear on every run; they can be intermittent.

Noticing the Kotlin Daemon File Handle Leak

As I mentioned, I found the Kotlin daemon file handle leak through curiosity and dumb luck. When Paul Klauser (https://github.com/PaulKlauser) reported a metaspace leak occurring in the Kotlin daemon, I was curious whether it was caused by a file handle leak, as I had been tracking one in the Gradle daemon (which I still haven't found).

To go over how I did this at a high level (more details later on), I attached Jenkins' file-leak-detector to the Kotlin daemon and ran ./gradlew clean assembleDebug --rerun-tasks several times, capturing the output of the file leak tool and using a post-processor (see https://github.com/centic9/file-leak-postprocess) to make the logs easier to read. I ended up with a file containing many stack traces that show where each file was opened. Many of them looked like the following (shortened for easy reading):

So what's going on here and how does this tell the story of a file leak?

Well, this stack trace actually represents 417 files that were opened and never closed by the time the Gradle task completed; for brevity, I listed only the final three files left open (lines 3-5). All of these files were opened in the ZipFile constructor (line 6), but that is not where the leak originated. The origin was elsewhere in the stack trace, and finding it required some investigation. Also, this is just one stack trace for one set of files. There were 1380 files left open at the end of the Gradle task run, and roughly another 1k files were opened on each subsequent run. In the following sections, I'll get into the details of how I debugged this file leak and found the fix for it.

Setting Up the file-leak-detector

To begin with, I downloaded the file-leak-detector jar, and in the root gradle.properties file of the NowInAndroid repo, I appended the following line to the JVM args of the Kotlin daemon:

kotlin.daemon.jvmargs=<other args> -javaagent:/path/to/file-leak-detector-jar-with-dependencies.jar=http=19999

What this does is attach the file-leak-detector Java Agent to each Kotlin daemon that is launched. Since I only want one Kotlin daemon running, I made sure to kill the other Kotlin daemon processes before running the Gradle command.

The flag http=19999 creates a local web server on port 19999 that the file-leak-detector uses to provide a list of all the open files and the stack traces that opened them. This is important because the Kotlin daemon is a long-lived process, so it doesn't exit when the build has completed.
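Because the listing is served over HTTP, a snapshot can be saved after each build without touching the daemon. The following is a minimal Java sketch of that step. The class name OpenFileSnapshot and the method save are hypothetical names chosen for illustration, and it assumes the agent answers a plain GET on the root path of the configured port.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.URI;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical helper: not part of file-leak-detector.
final class OpenFileSnapshot {

    /**
     * Downloads whatever the endpoint returns and stores it in target,
     * overwriting any earlier snapshot at that path.
     * ASSUMPTION: the agent responds to a plain GET on the given URI.
     *
     * @return the number of bytes written
     */
    static long save(URI endpoint, Path target) throws IOException {
        try (InputStream in = endpoint.toURL().openStream()) {
            return Files.copy(in, target, StandardCopyOption.REPLACE_EXISTING);
        }
    }
}
```

With the configuration above, a call might look like OpenFileSnapshot.save(URI.create("http://localhost:19999/"), Path.of("run-1.txt")), repeated with a new file name after each build.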

Capturing the File Handle Leak

Getting this file handle leak to occur was a bit tricky. I had to determine the task that would trigger the Kotlin compiler, and ensure that consecutive executions were re-run properly. For this reason I chose to run ./gradlew clean assembleDebug --rerun-tasks in order to ensure that the compilation occurred each time.

So what does each part do?

- clean ensures that no generated code exists, and gives me a clean build directory.

- assembleDebug triggers the compilation of the Android app in NowInAndroid.

- --rerun-tasks tells the Gradle daemon to ignore any up-to-date checks from tasks and rerun them all.

After the first successful build of NowInAndroid using the above command, I was able to go to localhost:19999, which lists all the files still open in the Kotlin daemon. That's where I got my first indication that something might be wrong, as 1380 file handles were still open. This didn't necessarily mean there was a file leak, as these open file handles could be cached. To be sure, I stored this result as a text file, and re-ran the Gradle command while making sure the Kotlin daemon was not restarted or killed.

The next run left me with 2694 open file handles, with each run opening another ~1k files. This was obviously a leak. I stored the results of each run in a text file for post-processing, to make it easier to read.
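The comparison between runs can also be scripted. The sketch below counts entries in two saved snapshots and reports the difference. The class SnapshotGrowth and its methods are hypothetical, and the rule for recognizing an entry (a line starting with "#") is an assumption about the detector's text output that should be checked against a real dump before relying on it.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.function.Predicate;
import java.util.stream.Stream;

// Hypothetical helper for comparing two saved snapshots.
final class SnapshotGrowth {

    // ASSUMPTION: each open file is introduced by a line starting with "#".
    // Swap in a different predicate if the real output differs.
    static final Predicate<String> ENTRY_LINE = line -> line.startsWith("#");

    /** Counts the lines in a snapshot that mark an open file. */
    static long countEntries(Path snapshot, Predicate<String> isEntry) throws IOException {
        try (Stream<String> lines = Files.lines(snapshot)) {
            return lines.filter(isEntry).count();
        }
    }

    /** Returns how many more entries the later snapshot has than the earlier one. */
    static long growth(Path before, Path after, Predicate<String> isEntry) throws IOException {
        return countEntries(after, isEntry) - countEntries(before, isEntry);
    }
}
```

For the two runs described here, the growth would be 2694 - 1380 = 1314 handles. A cache would be expected to level off across runs, while a leak keeps growing.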

Analyzing the Results

The file-leak-postprocess tool is useful as it gets all the open file handles that share a similar stacktrace and groups them together (see output above). I ran this post-processor tool for each output I collected, and took a quick look to see where most of these files were being opened. Visual Studio Code does a great job visualizing which stack traces have the most open file handles.

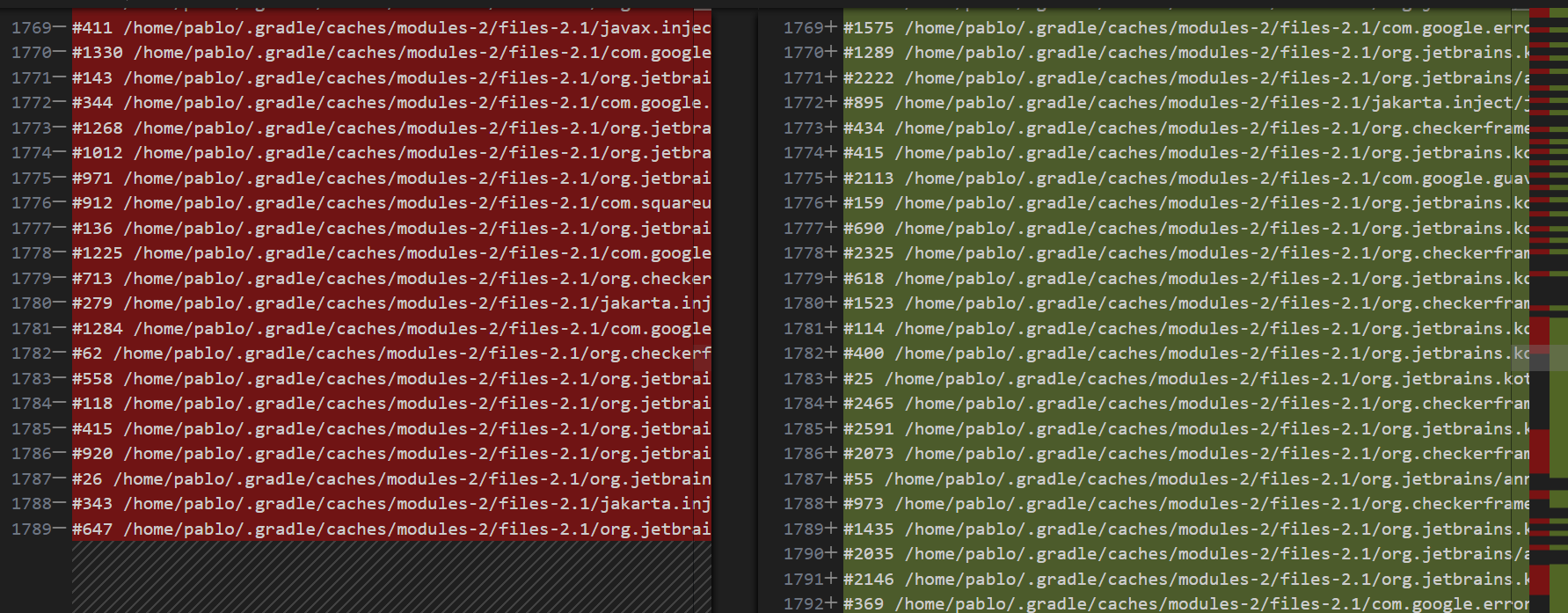

With this, I saw that there was a cluster of open files that shared common stack traces. What I needed to identify was which of these grew on consecutive runs, as that would determine the location of the leaks. Again, Visual Studio Code came in handy with its "Compare Selected" option, available when two files are selected in the file navigation. However, this comparison was a bit confusing to me at first.

Typically, I would expect to see only new files added to the specific stack traces that pointed to the leaks, yet here the previous files were being closed while an increasing number of new files were being opened. At first, I believed this was due to a collection that grew after each run, but I was not able to find any evidence to support this theory. The next approach I took was to look at the stack traces and find a common root for them all. Thankfully, Jason Pearson was in a Slack thread helping me identify this common root, which he pointed out to be org.jetbrains.kotlin.cli.jvm.K2JVMCompiler.doExecute(K2JVMCompiler.kt:43).

Now that I knew the general area where the file leaks were occurring, I was able to dig a bit deeper to understand the root cause. I discovered that both the Kotlin source code and the KSP source code were creating URLClassLoader objects but never closing them. By default, URLClassLoader caches the underlying .jar files it opens and keeps them open until the URLClassLoader has been closed or garbage collected (see: Closing a URLClassLoader). With this finding, I filed a bug with the Kotlin team and with the Google/KSP team.

Patching the File Handle Leaks

The fix for these file leaks was rather easy for KSP: I just needed to call URLClassLoader.close(). This was quickly achieved in this PR. Testing was a bit more difficult, but after working with the KSP team, I was able to test a local build of it on NowInAndroid and verified that the file leaks from KSP were gone!
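To illustrate the general pattern (this is not the actual KSP change), here is a small self-contained Java sketch. URLClassLoader implements Closeable, so a try-with-resources block guarantees close() is called and the underlying jar handle is released. The class ClassLoaderCloseDemo, its methods, and the sample jar contents are all made up for the example.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.URL;
import java.net.URLClassLoader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.jar.JarEntry;
import java.util.jar.JarOutputStream;

// Illustration only: names and jar contents are hypothetical.
final class ClassLoaderCloseDemo {

    /** Builds a throwaway jar containing a single text resource. */
    static Path createSampleJar() throws IOException {
        Path jar = Files.createTempFile("sample", ".jar");
        try (JarOutputStream out = new JarOutputStream(Files.newOutputStream(jar))) {
            out.putNextEntry(new JarEntry("hello.txt"));
            out.write("hello".getBytes(StandardCharsets.UTF_8));
            out.closeEntry();
        }
        return jar;
    }

    /** Reads a resource through a URLClassLoader that is closed before returning. */
    static String readResource(Path jar, String name) throws IOException {
        URL[] urls = { jar.toUri().toURL() };
        // Resources are closed in reverse order: the stream first, then the loader,
        // which releases the jar file the loader opened.
        try (URLClassLoader loader = new URLClassLoader(urls, null);
             InputStream in = loader.getResourceAsStream(name)) {
            if (in == null) {
                throw new IOException("Resource not found: " + name);
            }
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        Path jar = createSampleJar();
        System.out.println(readResource(jar, "hello.txt"));
        // Deleting an open jar fails on Windows, so this doubles as a leak check there.
        Files.delete(jar);
    }
}
```

Without the try-with-resources block, the jar would stay open until the loader is garbage collected, which in a long-lived daemon may be a long time.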

As for the Kotlin project, I made an attempt at a fix, but it turned out that it would require quite a bit more effort. Essentially, there were many paths that open a URLClassLoader, and my PR didn't cover all of them. Thankfully, Brian Norman on the Kotlin team took charge of this fix!